ICCS intern Ivan Shalashilin writes about his experience.

During my internship at Cambridge University, I learned to apply Gaussian processes (GPs), a type of machine learning model, to model ocean currents. Following my last week, I was invited to attend the Gaussian Processes Summer School hosted by the University of Manchester. This event gave me the opportunity to discover the wider context of applications of GPs and their uses in current research, and it was a fantastic networking opportunity for me.

What are Gaussian Processes all about?

The first day saw a warm welcome from Dr Mauricio Alvarez, followed by introductions to GPs from Prof Richard Wilkinson and Dr Carl Henrik. The talks were easily accessible to anyone with an undergraduate background in statistics, and both were entertaining speakers. During the first computing lab session, we went over the basic techniques of using GPs for regression in the Python library GPyTorch, with which I was comfortable. All GP models start with encoding prior assumptions about the function you are trying to learn in a kernel, and then choosing an appropriate likelihood that encodes your assumptions about how the measurements of the function are distributed. Together these define a posterior with tuneable hyperparameters, which are optimised by maximising the marginal likelihood of the data.
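The recipe above can be sketched in a few lines. This is a minimal illustration in plain NumPy rather than the lab's actual GPyTorch code; the squared-exponential kernel, the toy sine data, the noise level and the three candidate lengthscales are all assumptions chosen for the example.

```python
import numpy as np

def rbf_kernel(xa, xb, lengthscale=1.0, variance=1.0):
    """Squared-exponential kernel: encodes the prior belief that the function is smooth."""
    sq_dist = (xa[:, None] - xb[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / lengthscale**2)

def gp_posterior(x_train, y_train, x_test, lengthscale=1.0, variance=1.0, noise=1e-2):
    """Posterior mean and variance at x_test, plus the log marginal likelihood.

    Assumes a zero-mean prior and a Gaussian likelihood with variance `noise`.
    """
    n = len(x_train)
    K = rbf_kernel(x_train, x_train, lengthscale, variance) + noise * np.eye(n)
    K_s = rbf_kernel(x_train, x_test, lengthscale, variance)
    L = np.linalg.cholesky(K)  # avoids forming an explicit matrix inverse
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    v = np.linalg.solve(L, K_s)
    mean = K_s.T @ alpha
    var = variance - np.sum(v**2, axis=0)  # variance of the latent function
    log_ml = (-0.5 * y_train @ alpha
              - np.sum(np.log(np.diag(L)))
              - 0.5 * n * np.log(2.0 * np.pi))
    return mean, var, log_ml

# Toy data (hypothetical): five measurements of a sine wave.
x_train = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
y_train = np.sin(x_train)
x_test = np.linspace(-3.0, 3.0, 61)

# Crude hyperparameter "optimisation": keep the candidate lengthscale
# with the highest log marginal likelihood.
candidates = [0.3, 1.0, 3.0]
best_lengthscale = max(
    candidates,
    key=lambda ls: gp_posterior(x_train, y_train, x_test, lengthscale=ls)[2],
)
mean, var, log_ml = gp_posterior(x_train, y_train, x_test, lengthscale=best_lengthscale)
```

In practice a library would tune the hyperparameters with gradient-based optimisation rather than a three-point grid, but the quantity being maximised is the same.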

On the second day, Dr Vincent Adam explained how GPs differ from other machine learning techniques. His talk reinforced my understanding of how they provide excellent modelling given very sparse data, but have some unavoidable computational bottlenecks, namely integration and matrix inversion (dreaded by computer scientists, but not by mathematicians and physicists: they can just write down some symbols and don’t have to tediously calculate anything!). As a physicist, I was glad to hear that the resulting posterior model can be approximated by a Taylor expansion, a way of approximating functions with simpler ones, which works quite well when using complicated likelihoods.
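To give a flavour of the Taylor-expansion idea, here is a minimal sketch of what is usually called the Laplace approximation. I am assuming this is the kind of method meant; the single latent value, the Bernoulli (yes/no) likelihood and the prior variance are hypothetical choices to keep the example one-dimensional. The log posterior is expanded to second order around its peak, which amounts to replacing the posterior with a Gaussian.

```python
import math

def sigmoid(f):
    return 1.0 / (1.0 + math.exp(-f))

def laplace_approximation(y, prior_var, n_steps=25):
    """Gaussian approximation to p(f | y) for one latent value f.

    Prior: f ~ N(0, prior_var). Likelihood: y ~ Bernoulli(sigmoid(f)).
    Newton's method finds the mode of the log posterior; the curvature
    there (the second-order Taylor term) gives the approximate variance.
    """
    f = 0.0
    for _ in range(n_steps):
        p = sigmoid(f)
        grad = (y - p) - f / prior_var           # first derivative of log posterior
        hess = -p * (1.0 - p) - 1.0 / prior_var  # second derivative (always negative)
        f -= grad / hess                         # Newton step towards the mode
    p = sigmoid(f)
    hess = -p * (1.0 - p) - 1.0 / prior_var
    return f, -1.0 / hess

# One positive observation under a fairly broad prior.
mode, approx_var = laplace_approximation(y=1, prior_var=4.0)
```

The approximate variance comes out smaller than the prior variance, since the observation adds curvature to the log posterior.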

Exploring Manchester

During the break times, I enjoyed exploring the Manchester University campus. I visited the city museum and there was also a lovely dinner organised for us at The Wharf pub. On my way there, I met a group of summer school attendees who discussed their research on using GPs to optimise fusion reactor design. It was exciting to see how the same technique was being used in a completely different area to what I had been working on over the summer. Similar discussions were picked up over dinner. The delicious food added to the friendly atmosphere, making it a great experience overall.

By far the most interesting talk of the summer school, for me, was Dr Søren Hauberg’s lecture on combining GPs with differential geometry and topology. The idea was to use GPs to try and solve the identifiability problem: determining which unknown parameters of a mathematical model can be determined from known input-output data. The suggested solution? Lift the problem to a different mathematical space, then do your GP modelling in that space. In the toy problem outlined, the aim was to find the shortest possible path between points in this new space. For this he used some properties of the posterior model, specifically that it has an uncertainty at each point in space (just a number, don’t worry). Therein lies the surprising answer: the posterior uncertainty turns out to be a measure of closeness! If both points have a low uncertainty, they are nearby, and vice versa.

This type of thinking can be applied to controlling robots to move around obstacles and even in the identification of DNA. Dr Hauberg’s talk interested me the most because I immediately drew parallels to my undergraduate studies in physics and what is done under the hood in general relativity: metrics, charts, linear maps etc. Following the lecture, I was brimming with questions about how these constructs tie into his research, and subsequently had a very interesting discussion.

Where’s Wally?

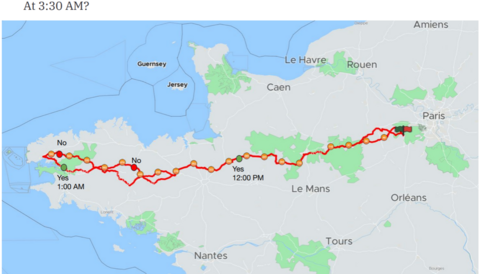

On the third day, my internship supervisor Dr Henry Moss explained how Bayesian optimisation (BO) is a way of using GPs to extremise functions which are expensive to evaluate. The idea is to choose where to evaluate the function next by making educated guesses given your current data, maximising the information gain. Dr Moss used the example of fellow professor Dr Carl Henrik’s recent multi-day bike touring race in northern France. The aim was to use the smallest number of evaluations to maximise the knowledge of where he was at a given point in the race! Once the scene was set, Dr Moss discussed the applications of BO in his research, namely optimising molecular designs so that the molecules are useful, cheap to produce, and stable.
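A bare-bones version of the BO loop might look like the sketch below. It is not taken from Dr Moss's talk: the "expensive" function is a hypothetical stand-in with a known peak at 0.3, the kernel settings are fixed by hand, and the educated guess is made with a simple upper-confidence-bound rule (posterior mean plus two standard deviations) swapped in for an information-gain criterion.

```python
import numpy as np

def rbf(xa, xb, lengthscale=0.2):
    """Squared-exponential kernel with unit prior variance."""
    return np.exp(-0.5 * (xa[:, None] - xb[None, :]) ** 2 / lengthscale**2)

def posterior(x_obs, y_obs, x_grid, noise=1e-4):
    """GP posterior mean and standard deviation on a grid of candidate inputs."""
    K = rbf(x_obs, x_obs) + noise * np.eye(len(x_obs))
    K_s = rbf(x_obs, x_grid)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_obs))
    v = np.linalg.solve(L, K_s)
    mean = K_s.T @ alpha
    std = np.sqrt(np.maximum(1.0 - np.sum(v**2, axis=0), 0.0))
    return mean, std

def expensive_function(x):
    """Hypothetical stand-in for a costly experiment; its maximum is at x = 0.3."""
    return -(x - 0.3) ** 2

x_grid = np.linspace(0.0, 1.0, 101)
x_obs = np.array([0.0, 1.0])            # two initial evaluations
y_obs = expensive_function(x_obs)

for _ in range(10):                      # budget of ten further evaluations
    mean, std = posterior(x_obs, y_obs, x_grid)
    ucb = mean + 2.0 * std               # favour points that look good or are uncertain
    x_next = x_grid[np.argmax(ucb)]
    x_obs = np.append(x_obs, x_next)
    y_obs = np.append(y_obs, expensive_function(x_next))

best_x = x_obs[np.argmax(y_obs)]         # best input found within the budget
```

Early iterations mostly explore where the model is uncertain; once the uncertainty has shrunk, the rule concentrates evaluations where the predicted value is highest.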

“What’s the next big thing in AI?”

The last session of the day involved an engaging and open-ended conversation with industry leader Prof Neil Lawrence and Dr Mauricio Alvarez. They discussed a myriad of different topics: how AI/machine learning branched from statistics into its own field, important literature in the field, the history of GPs and other questions from attendees. I took the opportunity to ask: what is the next big thing in AI? He responded that AI will be able to act on its input and learn from its actions in a feedback loop. He considered how animals evolve in response to their environment via a stimulus-response cycle. In comparison, he pointed out that machine learning algorithms typically only ‘receive stimulus’ in the form of isolated data, identify patterns, but don’t ‘act’ on the output. For example, an image classification algorithm can distinguish a cat from a car but doesn’t then know to pet the cat instead of the car!

The summer school was a fruitful follow-up to my research experience at Cambridge, and now I realise there is so much more to learn about Gaussian processes than I thought when I was concluding my project. There was also a pleasant social aspect to the school. It was a fantastic opportunity to meet the academic leaders in this field of research as well as like-minded young scientists who love learning as much as I do. As I go into my final year of physics at Imperial, I hope to carry on drawing parallels between my studies and the knowledge I gained from the research project and the summer school.